Why is There a Need for Low Power Solutions?

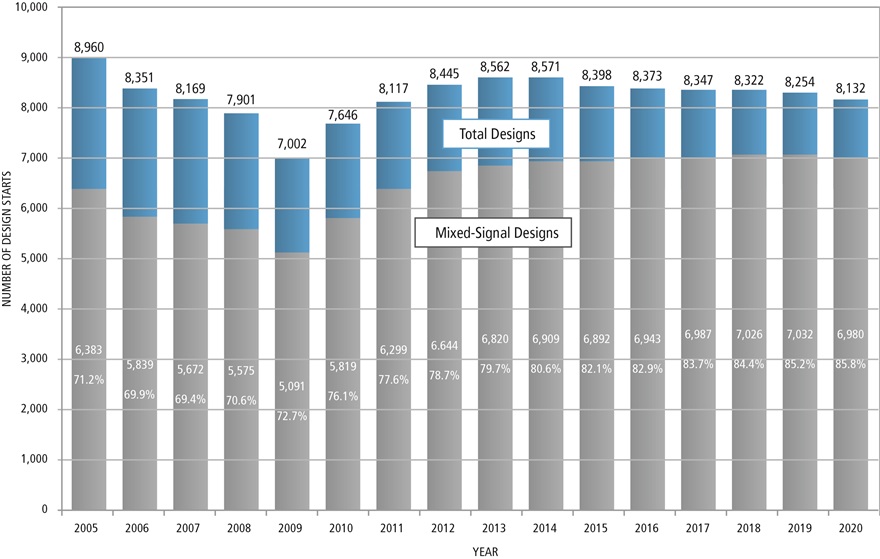

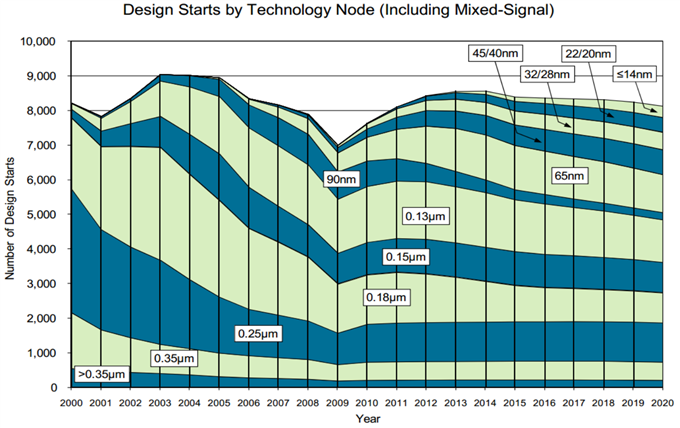

As demand grows for high-performance, multi-tasking systems-on-chips (SoCs) for communication and computing, the power requirements of these chips have also greatly increased. Production has surged for portable devices such as mobile phones, laptops, tablets, and game consoles that support multiple applications and make extensive use of multimedia features. Usage time for these devices has also gone up significantly, which further increases their power consumption.

However, battery technology has not evolved at the same pace, and battery life is a common problem for designers. Battery technology is also not expected to change drastically in the near future, for various technology and safety reasons. This further accentuates the need for low power devices. Market demand is also higher for devices that offer better in-use and stand-by battery life.

As designs also get smaller, power usage must be optimized to prevent unwanted temperature increases caused by high activity in the power structures. Techniques are needed that conserve power and improve energy efficiency without penalizing the performance of the design. Consequently, designers use various techniques to reduce power consumption, which extends the battery life of the final product and allows a smaller battery.

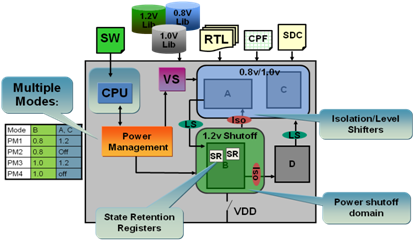

The Multi Supply Multi Voltage (MSMV) methodology is a very common way to achieve these objectives. It uses additional circuitry to control the power behavior of the cells in the design. Some of the basic techniques of this methodology are:

- Supplying different voltages to different groups of cells, thereby creating multiple power domains in the design.

- Using special low power cells to switch between different power supply levels in the design.

- Shutting off groups of cells during specific periods of circuit activity, while retaining the value stored, thereby creating different power modes of operation on the same design.
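The effect of these techniques can be sketched with the standard first-order estimate of CMOS dynamic power, P = activity × C × V² × f. The sketch below is only an illustration: the domain names, capacitances, frequencies, activity factors, and voltages are all made-up values, and leakage power is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerDomain:
    name: str
    switched_cap_farads: float  # effective switched capacitance (made-up value)
    clock_hz: float             # clock frequency (made-up value)
    activity: float             # fraction of the capacitance toggling per cycle


def dynamic_power(domain: PowerDomain, supply_volts: float) -> float:
    """First-order CMOS dynamic power in watts: activity * C * V^2 * f."""
    return (domain.activity * domain.switched_cap_farads
            * supply_volts ** 2 * domain.clock_hz)


# Hypothetical domains; none of these numbers come from a real design.
DOMAINS = [
    PowerDomain("cpu", 2.0e-9, 800e6, 0.15),
    PowerDomain("modem", 1.0e-9, 400e6, 0.10),
    PowerDomain("audio", 0.2e-9, 50e6, 0.05),
]

# Each power mode maps a domain name to its supply voltage; 0.0 means shut off.
POWER_MODES = {
    "single_supply": {"cpu": 1.2, "modem": 1.2, "audio": 1.2},
    "multi_voltage": {"cpu": 1.2, "modem": 1.0, "audio": 0.8},
    "standby": {"cpu": 0.0, "modem": 0.0, "audio": 0.8},
}


def mode_power(mode: str) -> float:
    """Total dynamic power of all domains in the given power mode."""
    volts = POWER_MODES[mode]
    return sum(dynamic_power(d, volts[d.name]) for d in DOMAINS)


if __name__ == "__main__":
    for mode in POWER_MODES:
        print(f"{mode:14s} {mode_power(mode) * 1e3:8.3f} mW")
```

Because power scales with the square of the supply voltage, lowering the voltage of less demanding domains and shutting off idle ones both reduce the total, which is the motivation for multiple power domains and power modes.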

A Low Power Design Structure

This requirement for low power designs poses a very interesting challenge for the EDA industry, since it is not an isolated tool problem and affects various product lines.

Requirements for a Low Power Mixed-Signal Flow

While these low power techniques reduce the overall power consumption of the design, they also pose some serious challenges for the automation and support of this methodology, particularly for mixed-signal and custom IC designs. Some of the important requirements for a successful low power mixed-signal flow are:

- It should be possible to manage the design intent and the power intent separately so that each can be independently optimized during the IP creation.

- There should be a universally accepted format/language to describe the power intent that can be deployed across the flow and is supported by various EDA vendors.

- It should be possible to package and export the power intent along with the design IP to be used with various tools.

- The flow should be able to automatically extract power intent for complex mixed-signal and custom cells. Because the design is captured in schematics rather than as a netlist, the power intent is in most cases already built into the design, and traversing multiple levels of hierarchy to extract it by hand is very tedious.

- It is equally necessary to verify the automatically extracted power description of the design, along with the functionality, before the IP is integrated into other designs.

- When the IP is integrated into other designs, the power intent defined for that instance must be available for use in the top-level design.

Low Power Mixed-Signal Capabilities in Virtuoso Schematic-XL

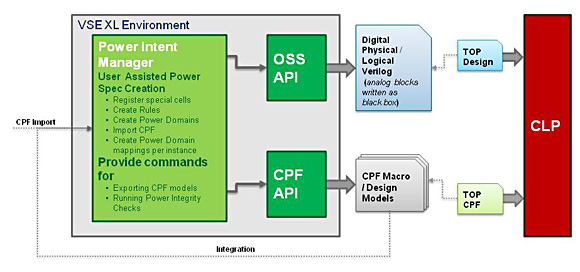

Keeping the above requirements in mind, the Virtuoso Schematic-XL suite offers a convenient and highly automated solution that supports the development of complex custom low power mixed-signal designs.

Automated Flow for CPF Extraction and Verification

Macro model and Design CPF extraction from schematics. The power intent for mixed-signal and analog blocks is often built into the design and can be complex to describe independently. The designer often has to reverse-engineer the design to infer the power intent and capture it accurately. In current flows this is a very time-consuming and error-prone task, as designs can have a large hierarchy with blocks implemented by different designers. The new Power Intent Export Assistant (PIEA) in Virtuoso automatically extracts the power intent from a custom/mixed-signal schematic and exports it as a Common Power Format (CPF) specification. The tool can extract both a macro model CPF, which uses the boundary ports to describe the low power interface of the block, and a full design-level CPF, which describes the complete power intent of the design.

To use the automatic CPF extraction capability in PIEA, the user only needs to perform a quick one-time setup that describes the devices, the special low power cells, and the supply nets. The extraction process is highly automated, and little manual intervention is needed to add or edit the power descriptions. The tool also lets the user manually specify the power domains, nominal conditions, and power modes. A user can also configure special library cells such as level shifters, isolation cells, and power switches, and define the rules associated with these cells in the design.
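To give a feel for how power domains, nominal conditions, and power modes appear in a CPF file, the sketch below renders a minimal CPF skeleton from plain Python data. It is a hand-written illustration and not the output or API of PIEA. The function `render_cpf` and the design, instance, signal, and domain names are hypothetical. The CPF command names come from the CPF standard, but only a small subset of options is shown, and the result should be checked against the CPF version the tools in use support.

```python
def render_cpf(design, domains, conditions, modes, default_mode):
    """Render a minimal CPF skeleton as text (illustrative subset of CPF only)."""
    lines = ["set_cpf_version 1.1", f"set_design {design}", ""]

    # One create_power_domain per domain; options are added only when given.
    for name, opts in domains.items():
        cmd = f"create_power_domain -name {name}"
        if opts.get("default"):
            cmd += " -default"
        if opts.get("instances"):
            cmd += " -instances {" + " ".join(opts["instances"]) + "}"
        if opts.get("shutoff_condition"):
            cmd += " -shutoff_condition {" + opts["shutoff_condition"] + "}"
        lines.append(cmd)
    lines.append("")

    # Nominal conditions name the voltage levels that domains can operate at.
    for name, volts in conditions.items():
        lines.append(f"create_nominal_condition -name {name} -voltage {volts}")
    lines.append("")

    # A power mode assigns one nominal condition to every power domain.
    for name, mapping in modes.items():
        pairs = " ".join(f"{dom}@{cond}" for dom, cond in mapping.items())
        default = " -default" if name == default_mode else ""
        lines.append(
            f"create_power_mode -name {name}{default} -domain_conditions {{{pairs}}}"
        )

    lines += ["", "end_design"]
    return "\n".join(lines)


# Hypothetical example: an always-on top domain and a switchable ADC block.
cpf_text = render_cpf(
    design="mixed_signal_top",
    domains={
        "PD_top": {"default": True},
        "PD_adc": {"instances": ["i_adc"], "shutoff_condition": "!adc_pwr_en"},
    },
    conditions={"on_1v2": 1.2, "on_1v8": 1.8, "off": 0},
    modes={
        "PM_active": {"PD_top": "on_1v2", "PD_adc": "on_1v8"},
        "PM_sleep": {"PD_top": "on_1v2", "PD_adc": "off"},
    },
    default_mode="PM_active",
)

if __name__ == "__main__":
    print(cpf_text)
```

A real design-level CPF would also carry the special-cell definitions and rules (level shifters, isolation cells, power switches) mentioned above; they are left out here to keep the sketch short.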

Automatic Extraction of Power Intent by PIEA

Integration of Digital IP blocks. Integrating digital IP blocks that have low power specifications into mixed-signal designs poses quite a few challenges. Since the block could be working in a different power domain than the other blocks in the design, the power structures required for the block must be available in the top-level design. This causes different power domains to exist in the design. Designers should make sure that the domain mapping from the top level to the block is correct so that the correct power signals are propagated across the hierarchy. They also need to verify that special cells such as level shifters and isolation cells are inserted properly at power domain crossings to match the power requirements of the top-level design.
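The check on power domain crossings can be illustrated with a small sketch. It is a simplified model and not how Virtuoso or Conformal Low Power performs the check: the classes, the net and domain names, and the two rules (a level shifter when the voltages differ, an isolation cell when the driving domain can be shut off) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    name: str
    volts: float
    switchable: bool  # True if the domain can be shut off


@dataclass(frozen=True)
class Crossing:
    net: str
    driver: str                     # name of the driving domain
    receiver: str                   # name of the receiving domain
    cells: frozenset = frozenset()  # special cells already present on the net


def required_cells(driver: Domain, receiver: Domain) -> set:
    """Special cells a crossing needs under the simplified rules above."""
    needed = set()
    if driver.volts != receiver.volts:
        needed.add("level_shifter")
    if driver.switchable:
        # Conservative: assume the receiver may stay on while the driver is off.
        needed.add("isolation")
    return needed


def missing_cells(domains: dict, crossings: list) -> dict:
    """Map each problematic net to the sorted list of cells it still lacks."""
    report = {}
    for x in crossings:
        missing = required_cells(domains[x.driver], domains[x.receiver]) - x.cells
        if missing:
            report[x.net] = sorted(missing)
    return report


# Hypothetical design: an always-on top domain and a switchable DSP block.
DOMAINS = {
    "PD_top": Domain("PD_top", 1.2, switchable=False),
    "PD_dsp": Domain("PD_dsp", 0.9, switchable=True),
}
CROSSINGS = [
    Crossing("dsp_out", "PD_dsp", "PD_top", frozenset({"level_shifter"})),
    Crossing("ctrl", "PD_top", "PD_dsp", frozenset({"level_shifter"})),
    Crossing("irq", "PD_dsp", "PD_top"),
]

if __name__ == "__main__":
    for net, cells in missing_cells(DOMAINS, CROSSINGS).items():
        print(f"{net}: missing {', '.join(cells)}")
```

In this example the `ctrl` net passes, while the two nets driven by the switchable domain are reported because they lack isolation.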

For IP blocks that have an associated CPF specification, Virtuoso Schematic-XL provides a mechanism to import the CPF file, with its power content, and associate it with the block's schematic or symbol view. The information in the CPF file is then used when extracting the CPF for the top-level schematic in which the IP is being integrated. This not only makes the top-level power intent extraction accurate but also supports power verification of the mixed-signal design, because the power intent of the digital IP is captured in the top-level CPF.

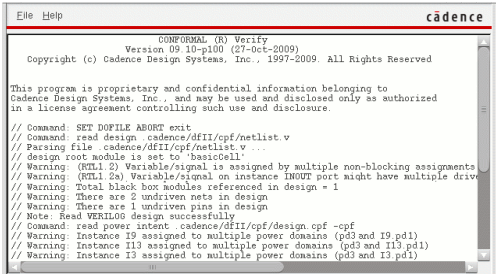

Verification of power intent. After the CPF file has been extracted, there is a need to verify that the extracted power intent specification is complete and accurately captures the power intent built into the design. To facilitate the verification of the power intent, PIEA provides an interface with the Cadence Conformal Low Power (CLP) tool.

This integration makes power verification for custom and mixed-signal designs a very easy task. The designer does not have to worry about generating inputs for the CLP tool. The mixed-signal design is written out as a digital netlist with the analog cells marked as black boxes. The interface also automatically creates the run files for the CLP tool. CLP is launched in the background automatically, and the CLP output window is brought up for the user to analyze the results. The power intent can be verified in this way at either the chip level or the block level.

The CLP output window after the verification of extracted CPF

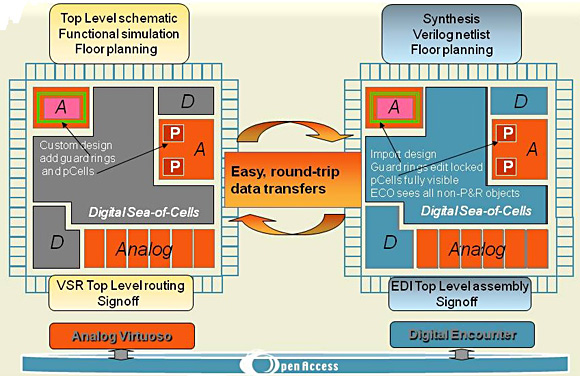

CPF import support for CDL Generation for LVS Verification. Not all physical verification tools accept Verilog and CPF as input for layout-versus-schematic (LVS) checks, so the physical verification flow must create a CDL file for the digital blocks. Traditional methods, which rely on either the logical or the physical Verilog, are limited when it comes to generating a complete schematic. Importing a logical Verilog file without the CPF results in a schematic with no power and ground connections, since the logical Verilog netlist contains no power description. Similarly, importing physical Verilog requires that the standard cell libraries within Virtuoso have explicit power and ground (PG) terminals. This is not always possible, as most designers use inherited connections to propagate the power and ground connections. With the CPF import support in Virtuoso, however, it becomes easy to read in the logical Verilog and annotate the power intent from the CPF onto the resulting schematic. This creates the correct power and ground net information in the schematic, which enables the export of CDL for LVS verification.
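The annotation step can be pictured as a lookup from each instance to the supply nets of the power domain that contains it. The sketch below is a conceptual illustration only and not the Virtuoso implementation: the function names, the `/` hierarchy separator, and all instance, domain, and net names are assumptions.

```python
def domain_of(instance_path, domain_roots, default_domain):
    """Return the domain whose listed root most specifically contains the instance."""
    best_domain, best_len = default_domain, -1
    for domain, roots in domain_roots.items():
        for root in roots:
            inside = instance_path == root or instance_path.startswith(root + "/")
            if inside and len(root) > best_len:
                best_domain, best_len = domain, len(root)
    return best_domain


def annotate_supplies(instances, domain_roots, domain_supplies, default_domain):
    """Map every instance path to the (power, ground) nets of its domain."""
    return {
        path: domain_supplies[domain_of(path, domain_roots, default_domain)]
        for path in instances
    }


# Instances as they might appear in a logical netlist, without PG pins.
INSTANCES = ["u_ctrl/U1", "u_dsp/U7", "u_dsp/u_mac/U3", "u_dsp2/U1"]

# Power intent as it might be read from a CPF file (hypothetical names).
DOMAIN_ROOTS = {"PD_dsp": ["u_dsp"], "PD_mac": ["u_dsp/u_mac"]}
DOMAIN_SUPPLIES = {
    "PD_top": ("VDD", "VSS"),
    "PD_dsp": ("VDD_DSP", "VSS"),
    "PD_mac": ("VDD_MAC", "VSS"),
}

ANNOTATED = annotate_supplies(INSTANCES, DOMAIN_ROOTS, DOMAIN_SUPPLIES, "PD_top")

if __name__ == "__main__":
    for path, (power, ground) in ANNOTATED.items():
        print(f"{path}: power={power} ground={ground}")
```

Instances that are not listed under any domain fall back to the default domain, which mirrors the idea of a default power domain in CPF.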

CDL Generation flow for Digital Designs

Design portability and reuse. With the Virtuoso Schematic-XL suite able to both read and write CPF, CPF can serve as a common ASCII format for a low power description that is reusable across all tools. The same digital cell CPF used during synthesis and physical implementation can be used for the top-level CPF extraction in Virtuoso, where the digital block is instantiated. It can also aid in the creation of CDL when the digital block has to be verified for LVS. The macro model CPF generated for analog designs can be used when these analog designs are instantiated in the digital world.

Concluding Remarks

Generating CPF manually has long been a very time-consuming and error-prone task for mixed-signal and custom designs. The capabilities in Virtuoso Schematic-XL substantially improve productivity in the low power flow. The integration with Conformal also helps ensure that the CPF file content is accurate, complete, and usable. These features, along with the support for CPF import, make it easier to port designs and integrate IP while capturing power intent.